2018 Assembly Projects

THE 2018 ASSEMBLY COHORT came together to work on the challenge of artificial intelligence and its governance. Over four months, Assemblers took part in a rigorous two-week design-thinking and team-building sprint, participated in a spring-term course, Ethics of AI, co-taught by Jonathan Zittrain and Joi Ito, and developed their projects throughout the three-month development period.

Projects focused on helping cities and communities, like AI Policy Pulse and AI in the Loop; on tackling problems that start at the dataset, like the Nutrition Label for Datasets; and on analyzing and discussing current practices in AI, such as equalAIs and Project Ordo. Finally, f[AI]r Startups aims to help companies implement ethical AI.

Read more below about the diverse set of projects developed throughout the course of the Assembly 2018 program.

The AI Policy Pulse team worked on a playbook for cities looking to build or buy AI technology. We focused on emerging questions, common challenges, and best practices, drawing on case studies from across North America.

We interviewed dozens of city builders, policymakers, and technologists as input for the project. Our project highlighted the top questions cities should ask themselves when considering AI or other predictive, automated decision-making systems for use in the city.

: Kathy Pham

AI in the Loop

: Kathy Pham

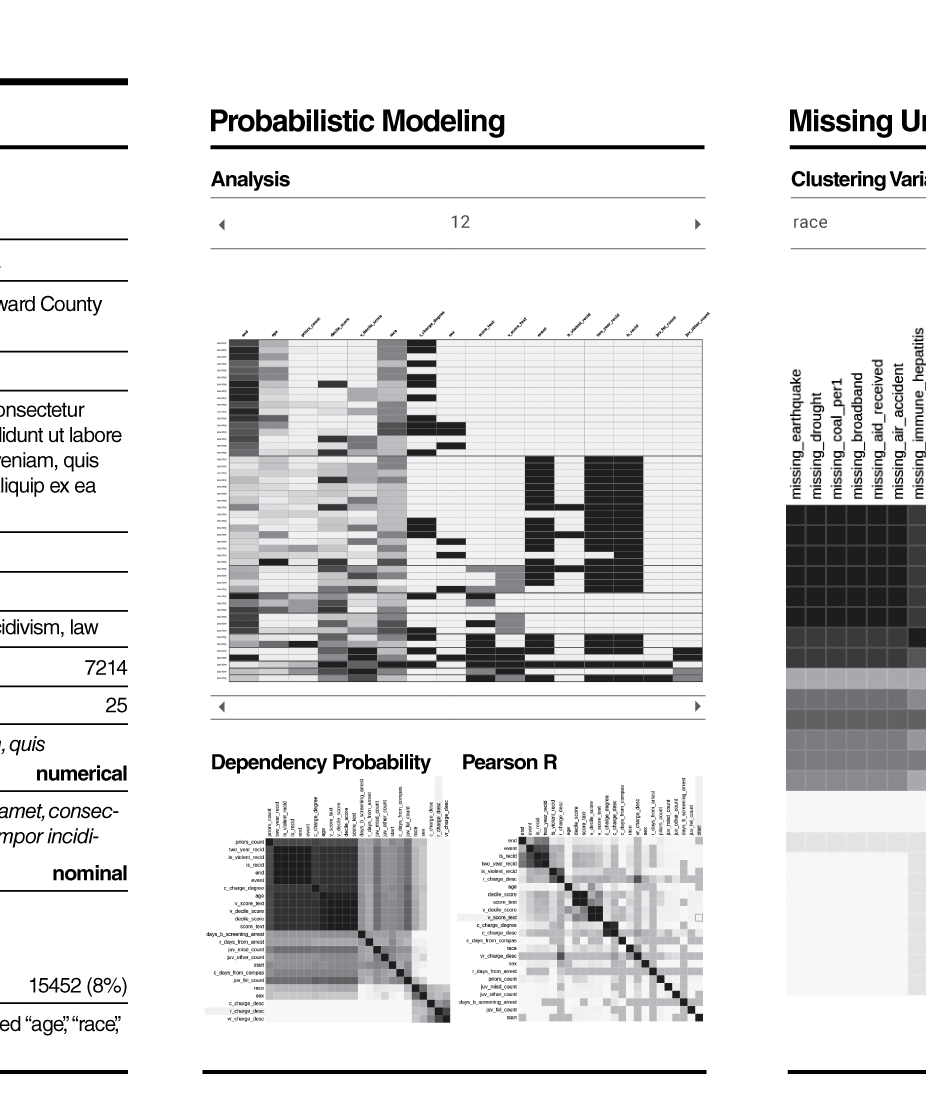

Algorithms matter, and so does the data they're trained on. One way to improve the accuracy and fairness of algorithms that determine everything from navigation directions to mortgage approvals is to make it easier for practitioners to quickly assess the viability and fitness of the datasets they intend to use to train their AI algorithms.

The Nutrition Label for Datasets aims to drive higher data standards through the creation of a diagnostic label that highlights and summarizes important characteristics of a dataset. Similar to a nutrition label on food, our label aims to identify the key ingredients in a dataset, including but not limited to metadata, diagnostic statistics, data genealogy, and anomalous distributions. We provide a single place where developers can get a quick overview of the data before building a model, ideally raising awareness of bias in the data within the context of ethical model building.

Our prototype, built on the Dollars for Docs dataset from the Centers for Medicare & Medicaid Services (CMS), generously made available to us by ProPublica, presents a number of modules spanning qualitative and quantitative information. It also makes use of the probabilistic computing tool BayesDB. You can learn more about the continuation of our work on our website or contact us at nutrition@media.mit.edu.

: Kathy Pham

Project Ordö takes inspiration from history, biology, and artificial intelligence to help train autonomous vehicles (AVs) to be safe and welcoming for the members of each community they enter.

Anybody's accident is everybody's accident. We proposed a framework for blameless accident reporting closely modeled on the field of aviation, where fatalities on commercial passenger jets fell to zero in 2017.

We created tools to help vehicles make more informed decisions by introducing the notions of 'Common Sense' and 'Community Sense.' These allow cities, civic organizations, activists, and conscious citizens to contribute their knowledge and be active participants in how the complex AI systems in each vehicle make decisions.

We proposed a Driving School for AVs, a certification process that is agnostic to the underlying technology, as well as a new epistemological category of AI Sherpa workers who could use Project Ordo to help autonomous vehicles learn to drive responsibly.

f[AI]r startups aimed to educate founders, investors, mentors, and accelerators about how startups can and should build AI ethically from the earliest stages of product development, without significant cost or distraction from the company vision.

We know that small companies building AI may not have the resources to address ethical questions on their own. How can we help early-stage founders and product teams address ethics and social biases from the start of their product development cycles? What would it take to make ethics, fairness, and accountability core values among startups when developing new products?

To help make this a reality, f[AI]r startups proposed hosting workshops and events, and providing resources, for startups on building tech ethically. The goal was to create a community of AI practitioners and start a movement to bring ethical AI to the forefront of the startup ecosystem.